Igniting Cognitive Combustion: Fusing Cognitive Architectures and LLMs to Build the Next Computer

“A system is never the sum of its parts, it's the product of their interactions”

Russell Ackoff.

We tend to fixate on the latest gadgets and algorithms, yet technology alone does not drive real-world change. True progress depends on the systems that integrate technologies into a solution. Individual tools are a means to an end; systems are the end itself.

Consider combustion. Humans have harnessed fire for millennia without unlocking its full potential. We've lit fires, carried torches, and inhabited remote parts of the planet. But extracting that potential took an intricate piece of architecture, the internal combustion engine, in which controlled explosions drive pistons that turn a crankshaft. Though engine components have advanced dramatically, the conceptual design endures because it produces results far beyond what the parts could achieve on their own.

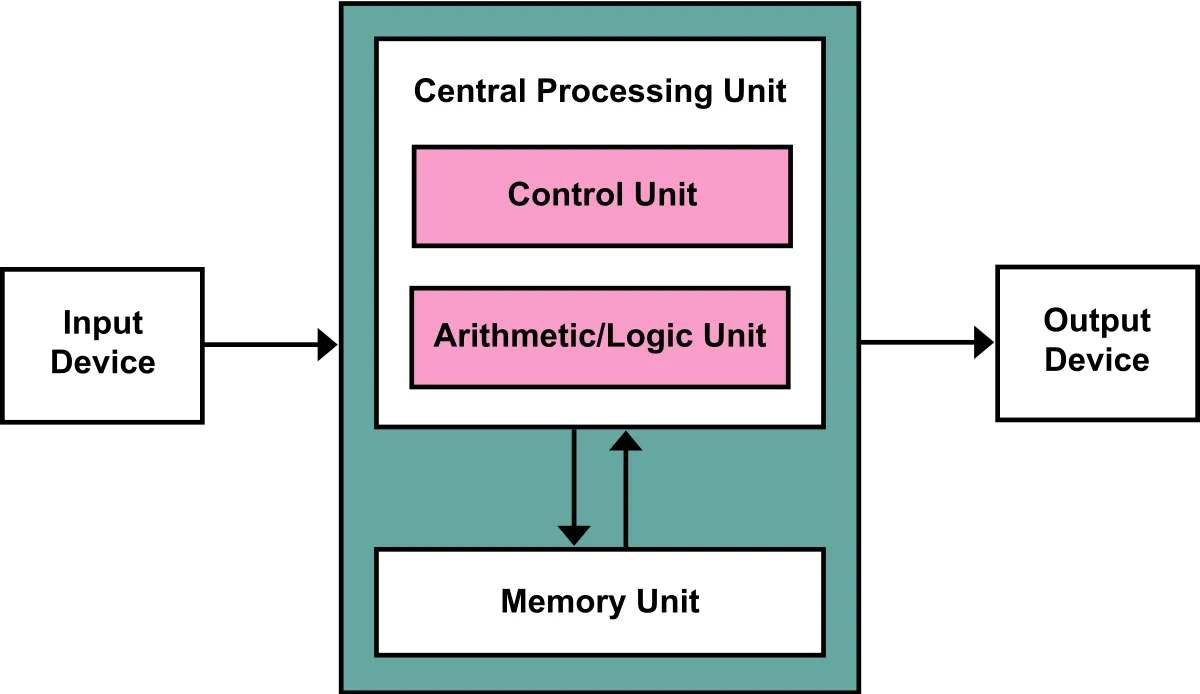

Likewise, in computing, progress owes much to systems thinking. In 1945, mathematician John von Neumann assembled memory, input, output, control, and processing into an integrated architecture for solving computational problems. This system – not any one component – gave rise to modern computing. Seventy-eight years later, though technologies are very different, von Neumann’s principles remain largely intact. Today’s vastly more powerful computers are structurally similar to their 1940s ancestors because architecture, not components alone, shapes how real-world problems are solved.

The von Neumann architecture (source Wikipedia)

We're on the cusp of another rethink, this time driven by the rise of language models. But before we get to that, let's look at what we're working with.

Symbolic and Sub-Symbolic

For most of us, technology implies logical, explainable systems based on symbols like code, numbers, and language. We give computers explicit symbolic instructions, and they deterministically follow our recipes to produce expected outputs. These rule-based systems are the yang to the yin of messy, unstructured sub-symbolic systems.

Sub-symbolic systems like neural networks mimic the distributed, parallel nature of biological neurons. Their answers emerge from the uncoordinated activity of individual nodes, just as colonies emerge from ants following simple patterns. While symbolic systems are crafted by human logic, sub-symbolic systems identify patterns and make fuzzy associations on their own. Their results seem magical but opaque compared to transparent symbolic processes.

Yet, like the yin-yang symbol, our minds unite these dual modes. Our symbolic faculties let us craft and follow rules, build logical arguments, and communicate clearly through language. Our sub-symbolic faculties recognize faces, sense emotions, and move smoothly through the messy physical world.

Square Peg. Round Hole.

Our rule-bound world has encountered an unruly stranger – the large language model (LLM). We demand our technologies behave logically and produce consistent results, yet LLMs think probabilistically, seeing possibilities where we see dead-ends. We dismiss their creativity as hallucinatory, judging through the square lens of symbolic thinking. But the future requires an engine that can ignite both symbolic and sub-symbolic in cognitive combustion, harnessing their energy to drive us to new computational heights.

The good news is scientists have been working on this for a while. Who wouldn't want to crack the code of making machines act like humans?

Cognitive Architectures

Since the 1950s, researchers have pursued "cognitive architectures" - systems that can reason broadly, gain insights, adapt to change, and even reflect on their own thinking.

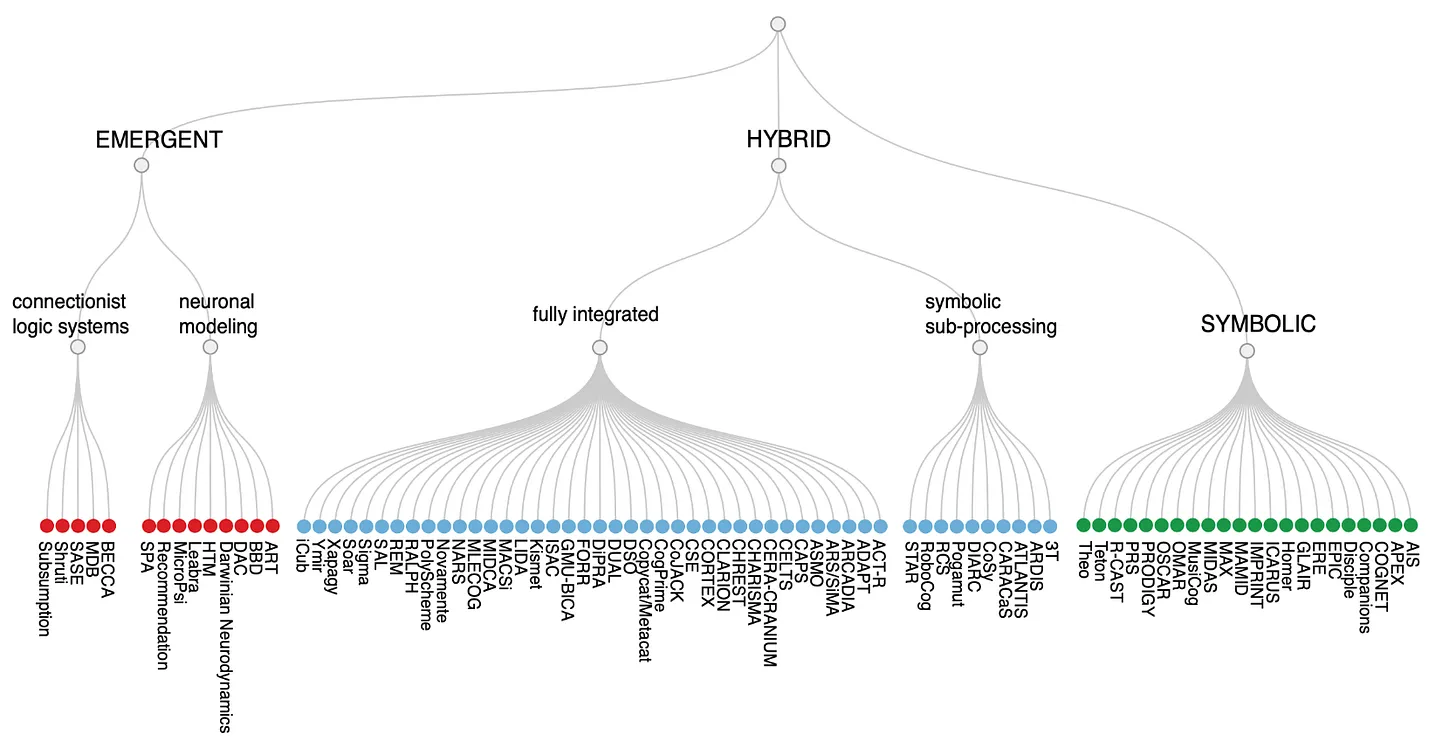

After decades of research, cognitive architectures have proliferated to over 300 proposals. In their review, Kotseruba and Tsotsos analyzed 84 architectures, organizing them into a taxonomy. They found that architectures ranged from biologically inspired neural networks to rigid symbolic rule systems, with many hybrids in between.

Taxonomy of Cognitive Architectures: Source Kotseruba & Tsotsos

Common Model of Cognition

Thankfully, we don't have to parse through all of these models. In 2017, John Laird, Christian Lebiere, and Paul Rosenbloom proposed a "standard model of the mind", since renamed the Common Model of Cognition, to provide a common framework for discussing these architectures. Their model synthesizes key concepts from three pioneering architectures:

ACT-R, developed by John Anderson since 1976, models cognition through interacting procedural and declarative memories. Procedural memory encodes skills and routines, while declarative memory stores facts. By following procedures to access and apply declarative knowledge, ACT-R demonstrates how the interplay between "knowing that" and "knowing how" enables intelligent behavior.

Soar, created by John Laird, Allen Newell, and Paul Rosenbloom in the early 1980s, provides a unified rule-based system spanning perception, learning, planning, problem-solving, and decision-making. "Productions" represent knowledge as condition-action rules operating on a working memory. Soar simulates the mind's flexible, goal-directed abilities by dynamically matching and firing productions.
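To make "condition-action rules operating on a working memory" concrete, here is a minimal sketch of a generic production system. It is not Soar's actual implementation or API: the `Production` class, the `run` function, the first-match firing strategy, and the traffic-light rules are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Callable

# Working memory is modelled as a set of (attribute, value) facts.
Fact = tuple[str, str]


@dataclass(frozen=True)
class Production:
    """A hypothetical condition-action rule (not Soar's real rule format)."""
    name: str
    condition: Callable[[set[Fact]], bool]    # does the rule match working memory?
    action: Callable[[set[Fact]], set[Fact]]  # facts the rule wants to add


def run(productions: list[Production], working_memory: set[Fact],
        max_cycles: int = 10) -> list[str]:
    """Each cycle, fire the first matching rule that adds a new fact.

    Stops when no rule can change working memory, or after max_cycles.
    Returns the names of the rules that fired, in order.
    """
    fired: list[str] = []
    for _ in range(max_cycles):
        for rule in productions:
            if rule.condition(working_memory):
                new_facts = rule.action(working_memory) - working_memory
                if new_facts:
                    working_memory |= new_facts  # updates the caller's set in place
                    fired.append(rule.name)
                    break
        else:
            break  # no rule changed anything this cycle
    return fired


rules = [
    Production("see-red-light",
               lambda wm: ("light", "red") in wm,
               lambda wm: {("goal", "stop")}),
    Production("stop-car",
               lambda wm: ("goal", "stop") in wm,
               lambda wm: {("action", "brake")}),
]
wm: set[Fact] = {("light", "red")}
trace = run(rules, wm)
print(trace)  # ['see-red-light', 'stop-car']
```

Notice that neither rule mentions the other. The second rule fires only because the first one changed working memory, which is the sense in which behavior arises from the rules' interactions rather than from a fixed script.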

Sigma, developed by Paul Rosenbloom and colleagues at the University of Southern California, builds on graphical models, specifically factor graphs, to unify symbolic reasoning and probabilistic inference in a single mechanism. Rather than keeping rules and statistical knowledge in separate modules, it expresses both in one representation and processes them by passing messages over the graph.

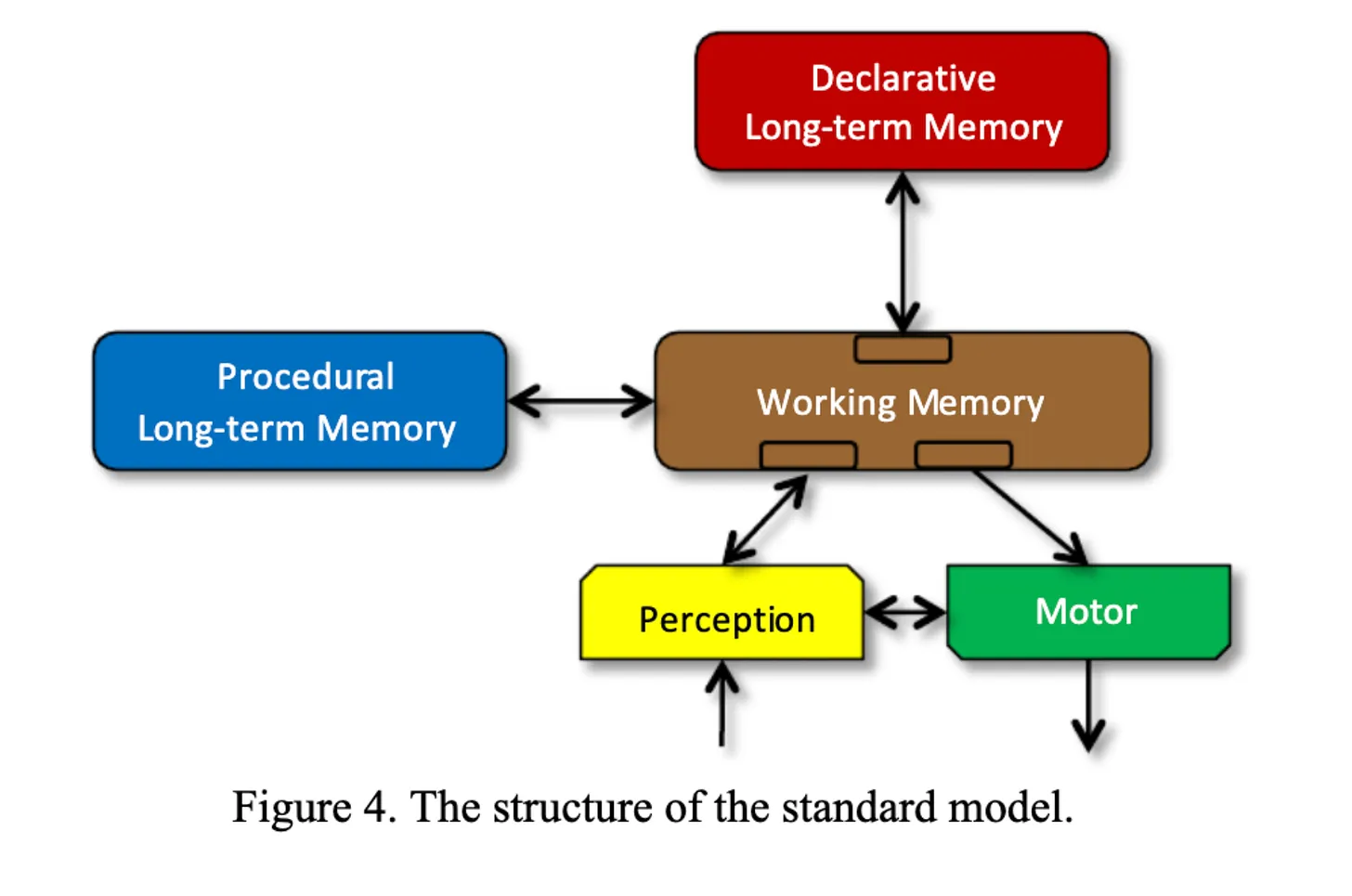

The standard model of mind comprises three interactive memory systems:

Declarative memory is our knowledge base, storing facts and concepts we can consciously declare. It retains information like your favorite color, historical events, traffic signs, or vocabulary. Declarative memory provides the raw material for thought, which other systems draw upon.

Procedural memory encodes skills and routines, the "how-to" knowledge enabling behavior. It allows us to ride bikes, tie shoes or play instruments. Procedural memory translates knowledge into action.

Working memory is our mental workspace, temporarily retaining and manipulating information to enable planning, problem-solving, and decision-making. When solving a math problem, working memory holds numbers, operations, and intermediate steps until a solution emerges. It integrates accessing and applying knowledge from declarative and procedural memory.

This model has no central controller. Instead, a "cognitive cycle" drives the system, much as a clock cycle drives a computer: on each cycle, the contents of working memory select a procedure, which executes and updates working memory in pursuit of the current goal.

Driving a car, for example, requires:

Declarative knowledge of signs, controls, and routes

Procedural skills for driving

Working memory to monitor conditions and react in the moment
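The driving example can be sketched as a toy cognitive cycle. This is an illustration under loud assumptions, not the standard model's formal specification: the `Mind` class, its `cycle` method, the dictionary-based memories, and the sample facts are all invented here.

```python
from dataclasses import dataclass, field


@dataclass
class Mind:
    """Hypothetical three-memory agent; every name here is illustrative."""
    declarative: dict[str, str]  # facts ("knowing that"): percept -> meaning
    procedural: dict[str, str]   # skills ("knowing how"): meaning -> action
    working: dict[str, str] = field(default_factory=dict)  # mental workspace

    def cycle(self, percept: str) -> str:
        """One cognitive cycle: perceive, retrieve a fact, select an action."""
        self.working["percept"] = percept
        # Retrieve what the percept means from declarative memory.
        meaning = self.declarative.get(percept, "unknown")
        self.working["meaning"] = meaning
        # Select the skill that matches the interpreted situation.
        action = self.procedural.get(meaning, "keep driving")
        self.working["action"] = action
        return action


driver = Mind(
    declarative={"red octagon": "stop sign", "green light": "go signal"},
    procedural={"stop sign": "brake to a halt", "go signal": "accelerate"},
)
actions = [driver.cycle(p) for p in ["green light", "red octagon", "billboard"]]
print(actions)  # ['accelerate', 'brake to a halt', 'keep driving']
```

There is no controller object deciding what happens next. Each call to `cycle` simply lets the current contents of working memory pull a fact and then a skill, and the unfamiliar "billboard" percept falls through to a default action.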

Integrating Language Models into the Standard Model of Mind

The standard model articulated key faculties before the current boom in language models. While it's still early, today's agent frameworks (AutoGPT, BabyAGI, etc.) already implement some of these capabilities.

Rather than distinct memory modules, these frameworks access external knowledge sources through vector search. Perception and motor modules are implemented as API calls and plugins. The language model's context window is the temporary working memory.
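As a rough illustration of that mapping, the sketch below uses a toy vector search as declarative memory and a word budget as the context window. It does not reproduce how AutoGPT or BabyAGI work internally: the word-count "embedding", the function names, and the budget are assumptions made for the example, and a real system would use a learned embedding model and a tokenizer.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[str], k: int = 1) -> list[str]:
    """Vector search playing the role of declarative memory."""
    q = embed(query)
    ranked = sorted(store, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_context(query: str, store: list[str], max_words: int = 20) -> str:
    """Fill the 'context window' (working memory) up to a word budget."""
    parts = [f"Question: {query}"]
    used = len(parts[0].split())
    for doc in retrieve(query, store, k=len(store)):
        cost = len(doc.split()) + 1  # +1 for the "Fact:" label
        if used + cost > max_words:
            break  # the window is full; less relevant facts are left out
        parts.append(f"Fact: {doc}")
        used += cost
    return "\n".join(parts)


knowledge = [
    "a red octagon sign means stop",
    "the capital of France is Paris",
    "a flashing yellow light means proceed with caution",
]
question = "what does a red octagon sign mean"
top = retrieve(question, knowledge)
context = build_context(question, knowledge)
print(context)
```

The budget is the point of the example: because working memory is bounded, retrieval has to rank facts so that the most relevant ones make it into the window and the rest are dropped.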

A key gap remains: robust learning. Systems today lack a mechanism for accumulating knowledge and skills continuously from experience. They rely on prompt and model tuning instead. The next breakthrough will be integrating a mechanism that enables continuous learning from interactions, accumulating knowledge and skills on the fly - like human minds!

A New Recipe for Progress? Crafting Cognitive Architectures

Today we find ourselves with new ingredients - language models, vector search, APIs - and old recipes for intelligence from cognitive architectures. Whether these can be stirred into a new system with capabilities beyond any single part remains unclear. History offers examples of recipes that endured, like von Neumann's computing framework or the internal combustion engine. But for every visionary recipe, there were many failed concoctions.

One thing is clear. When we figure out this new 'cognition engine,' it will propel progress more profoundly than any technology before it.
