CPUs or Operating Systems? How LLMs are sparking a new platform battle

In our ever-evolving technological landscape, it's only natural for us to relate to new advancements through familiar mental models. When OpenAI introduced their language model via an API, it was tempting to view them as a service provider, like Twilio. After all, just as Twilio provided a foundation upon which developers could build communication apps, OpenAI's large language models (LLMs) appeared to be the foundational service enabling developers to create a new breed of AI applications.

As LLMs revealed their potential, we started to envision use cases beyond simple text completion. Our collective imagination soared as we contemplated architecting a new kind of AI-driven app with LLMs as a core component.

In January 2023, Madrona noted in their blog:

"These larger models have shown emergent capabilities of complex reasoning, reasoning with knowledge, and out-of-distribution robustness, none of which are present in smaller, more specialized models. These big ones are called foundation models because developers can build applications on top of them."

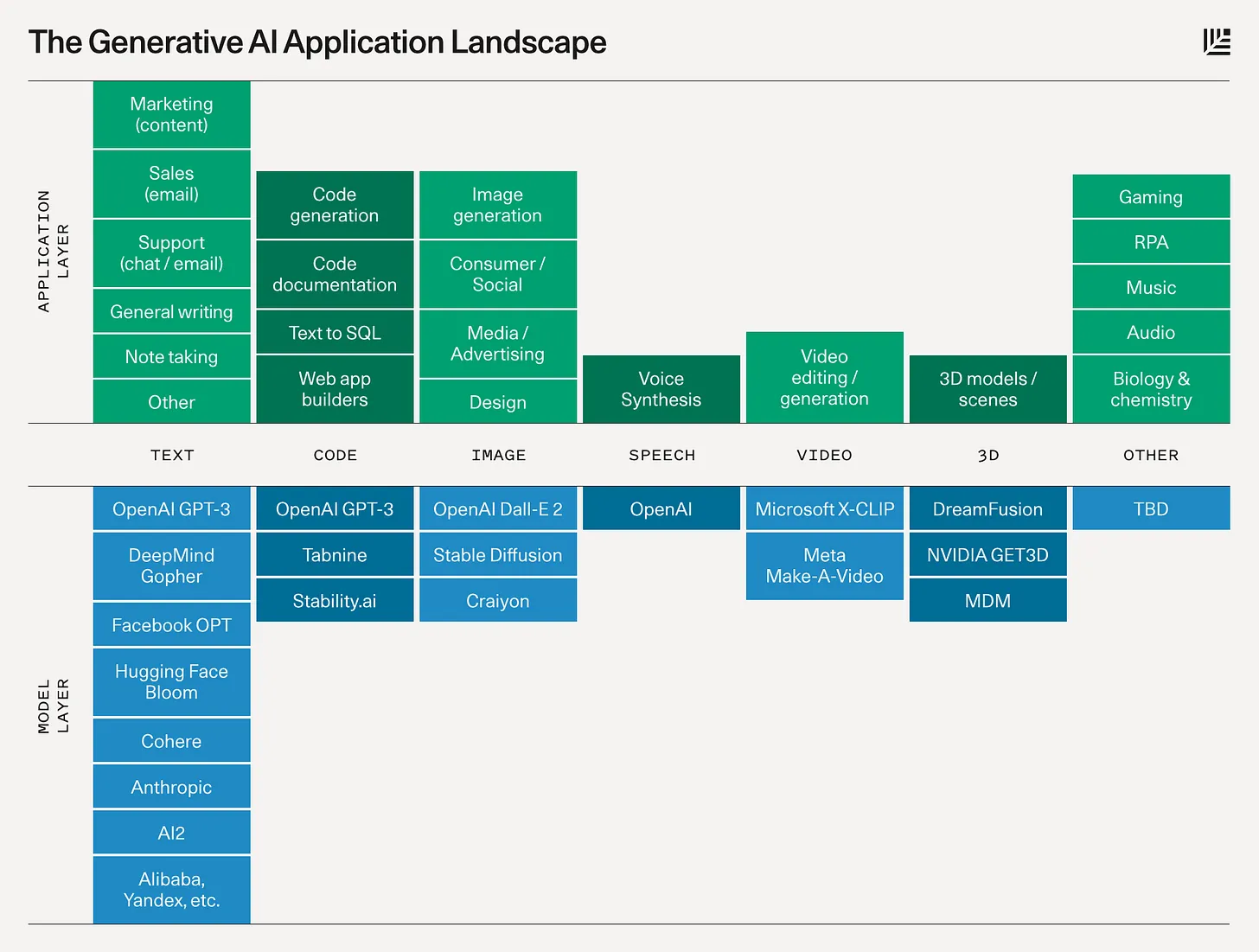

Sequoia’s Generative AI Landscape

Around the same time, Sequoia also released their Generative AI landscape, which followed a familiar framework of application and infrastructure layers. In this framework, they observed a competition between OpenAI, Cohere, and Anthropic to establish themselves as the new compute layer for AI applications. In this context, LLMs neatly fit into an existing paradigm, akin to CPUs in traditional computing.

LLMs as CPUs

Articles such as "LLMs are the new CPUs" by Nathan Baschez at Every (March 2023) made the comparison explicit:

"Today we are seeing the emergence of a new kind of “central processor” that defines performance for a wide variety of applications. But instead of performing simple, deterministic operations on 1’s and 0’s, these new processors take natural language (and now images) as their input and perform intelligent probabilistic reasoning, returning text as an output"

SaaS companies could now claim they were more than just a wrapper around LLMs: they were essential for applying this new kind of CPU to business problems.

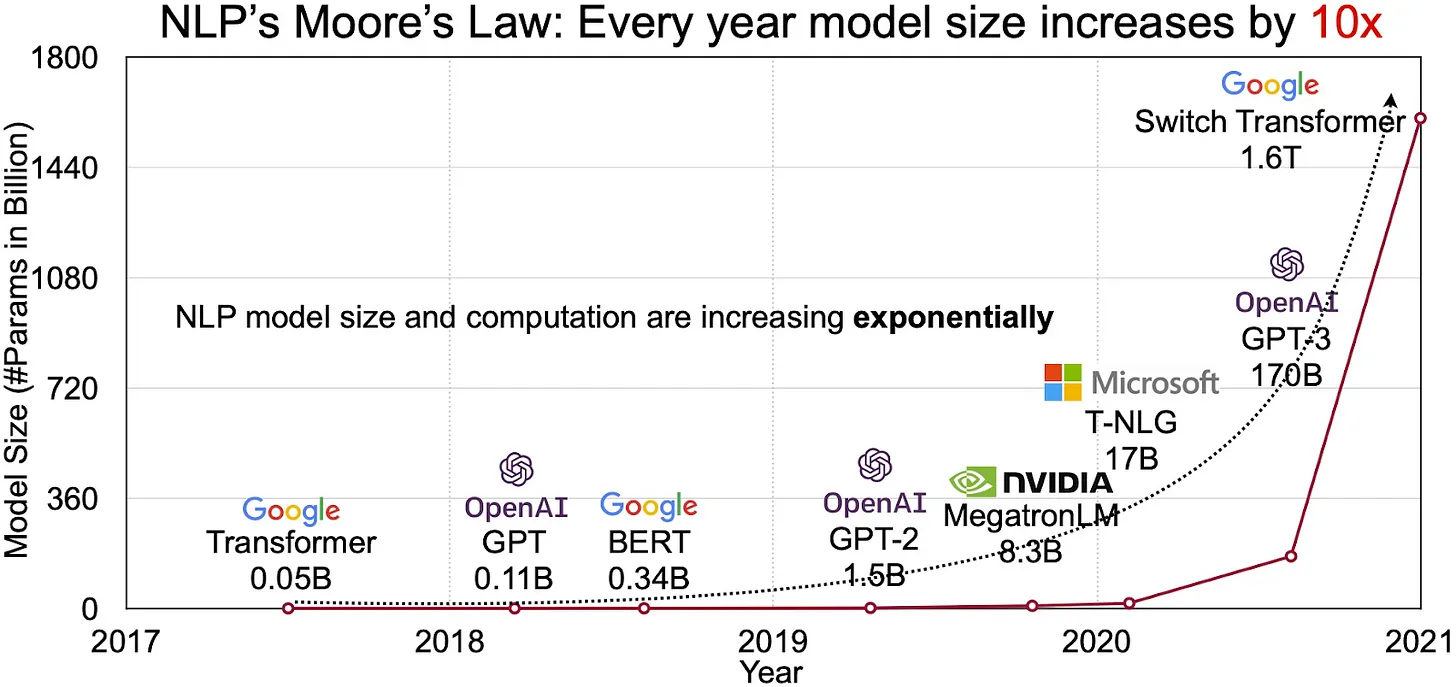

Inspired by this analogy, we began drawing comparisons between models based on their size, much like we did with CPUs. This approach seamlessly aligned with our existing mental models.

Consequently, graphs such as the one depicted below began surfacing, comparing the growth in parameter counts with Moore's Law, the well-known rule of thumb for the advancement of chips. (Model size has since been dismissed as the key metric; bigger isn't always better.)

Source: India AI

LLMs as the Operating System

However, OpenAI took a different approach with the launch of ChatGPT in late 2022, a move that has since become the stuff of legend. By offering their model through a chat interface, OpenAI not only gave developers a convenient way to try out its capabilities but also opened the door for everyday users to engage with it directly. The response was nothing short of astounding, with ChatGPT reaching one million users within five days and now boasting a user base of 100 million. Moreover, the OpenAI website itself attracts over one billion visits per month.

Taking a page out of Apple's playbook, OpenAI looked to the remarkable success of the App Store. Starting with a modest selection of 500 apps in 2008, the App Store has since transformed into a juggernaut, paying out a staggering total of $320 billion to developers. In Apple's latest quarterly earnings, their Services division, which includes the App Store, reported revenue of $20 billion.

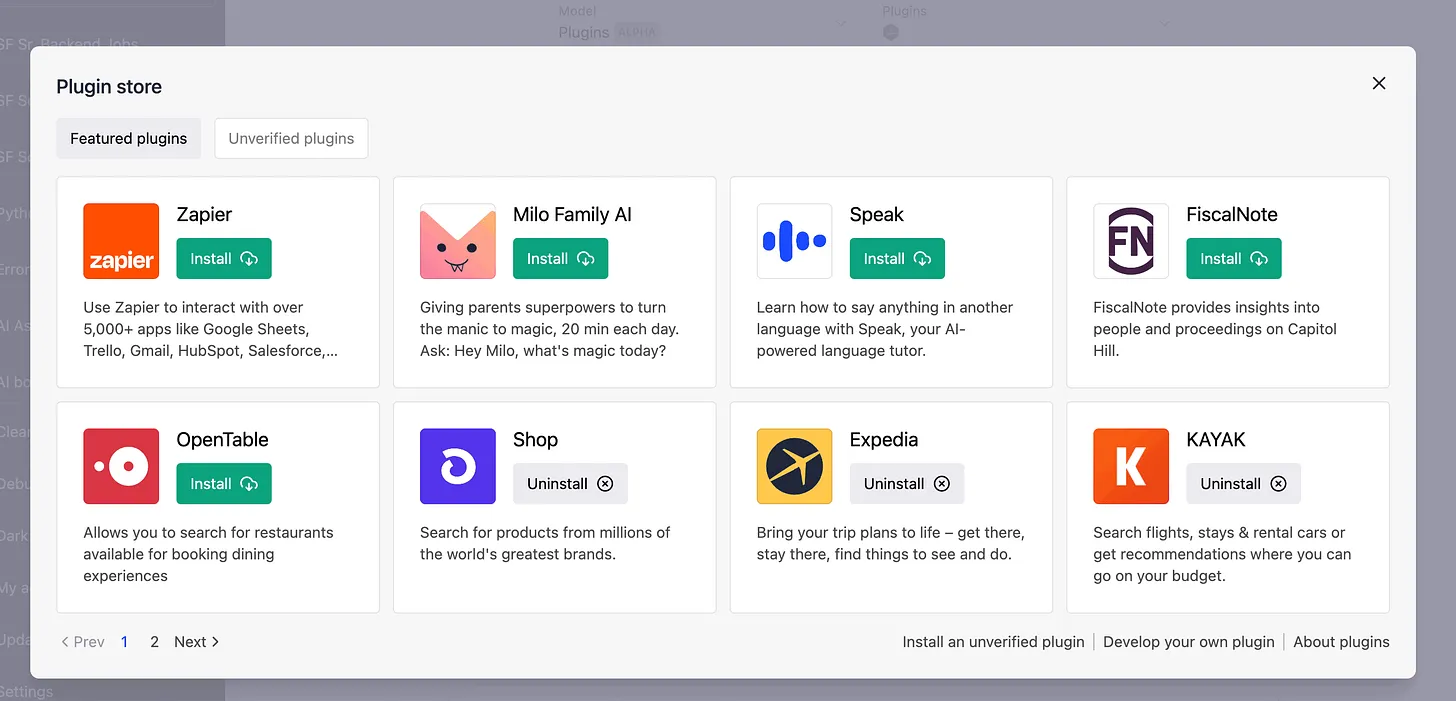

Drawing from this inspiration, OpenAI disrupted the CPU paradigm for certain use cases by introducing a plugin store. In doing so, they set their sights on the most crucial aspect in the equation—the customer experience.

ChatGPT Plugin Store


The battle to own the user experience

“In a world of intense competition and nonstop disruption, the user (customer) experience is the only sustainable competitive advantage.”

Kerry Bodine, Forrester analyst

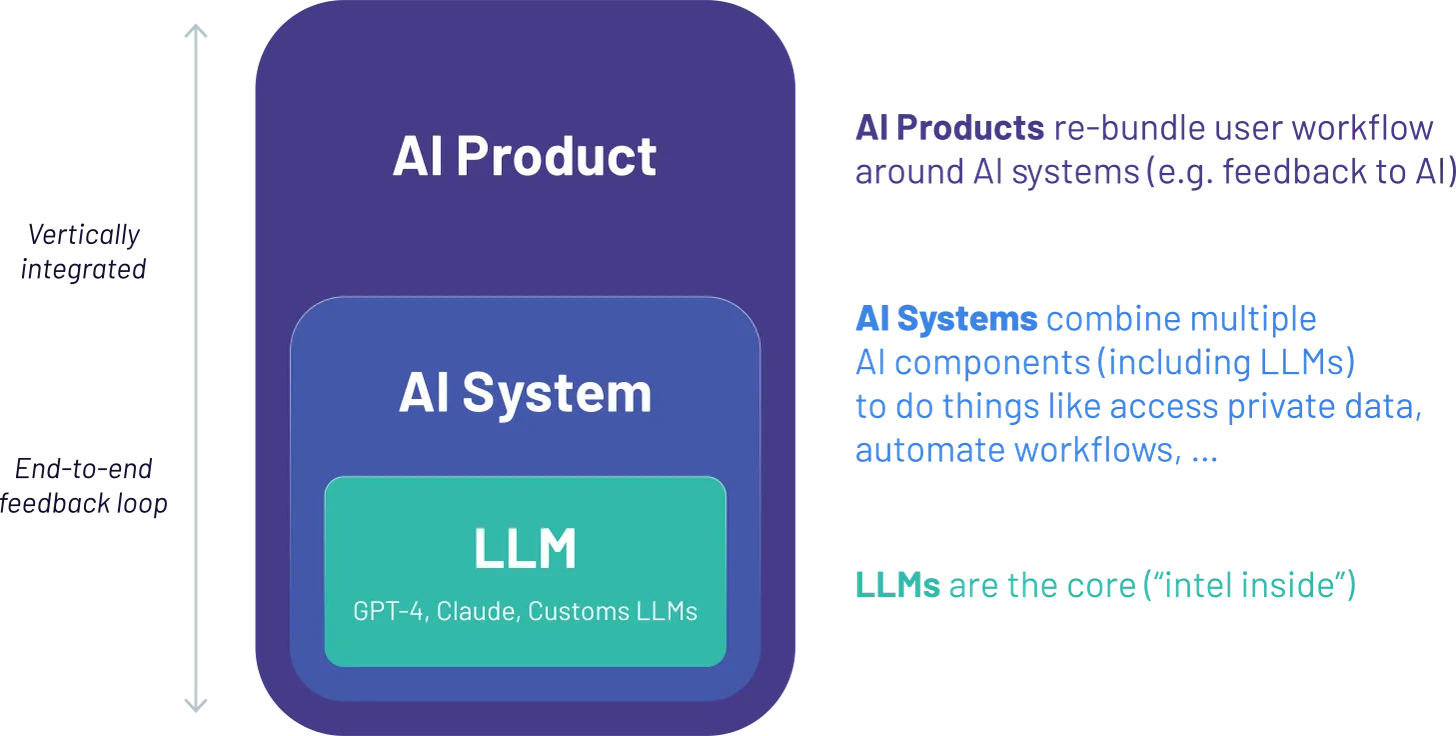

Plugins invert the CPU paradigm into an Operating System paradigm. Now the LLM isn't a CPU but the interface through which you access app functionality. The apps are now inside the LLM box. In this paradigm, you're not on OpenTable's site using their AI functionality. You are in ChatGPT using OpenTable's APIs without even visiting their site. Want to look up a recipe on AllRecipes and add it to your Instacart? You can do it all within the comfort of your ChatGPT interface.

Now OpenAI owns the user experience (like Apple does with iOS) and acts as the mediator, determining which apps are available to end users. By leveraging text as the primary interface, navigating functionality becomes a breeze. Even small user interface components can be rendered into ChatGPT, placing OpenAI in control of the overall user experience.
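To make the inversion concrete, here is a minimal sketch of the two paradigms side by side. Everything in it is hypothetical and for illustration only: `fake_llm` stands in for a hosted model, `RestaurantApp` and `ChatPlatform` are made-up names, and the keyword match is a crude stand-in for the model deciding which plugin to call. It is not how ChatGPT plugins are actually implemented.

```python
from typing import Callable, Dict


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a hosted model; a real system would call an LLM API."""
    return f"[model output for: {prompt}]"


class RestaurantApp:
    """CPU paradigm: the app owns the interface and calls the model as a component."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm

    def handle(self, user_text: str) -> str:
        return "RestaurantApp UI: " + self.llm(user_text)


class ChatPlatform:
    """OS paradigm: the chat platform owns the interface; apps live inside it as plugins."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.plugins: Dict[str, Callable[[str], str]] = {}

    def register(self, keyword: str, plugin: Callable[[str], str], approved: bool) -> bool:
        # The platform is the gatekeeper: only approved plugins reach users.
        if approved:
            self.plugins[keyword] = plugin
        return approved

    def handle(self, user_text: str) -> str:
        # Assumption: keyword matching stands in for the model choosing a plugin.
        for keyword, plugin in self.plugins.items():
            if keyword in user_text.lower():
                return "Chat UI: " + plugin(user_text)
        # No plugin matched, so the model answers on its own.
        return "Chat UI: " + self.llm(user_text)


if __name__ == "__main__":
    platform = ChatPlatform(fake_llm)
    platform.register("table", lambda text: "reservation requested", approved=True)
    print(RestaurantApp(fake_llm).handle("Suggest a quiet place for dinner"))
    print(platform.handle("Book a table for two"))
```

In the first class the user sees the app's interface and the model is hidden inside it. In the second, the user only ever sees the chat interface, and the platform decides which apps get in.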

But OpenAI is not alone in this endeavor. Microsoft's Bing Chat (built on OpenAI's GPT models) has announced its own set of plugins. Their strategic objective? To put OpenAI back in its box and seize ownership of the user experience.

Even Intel didn't want to be put in a box

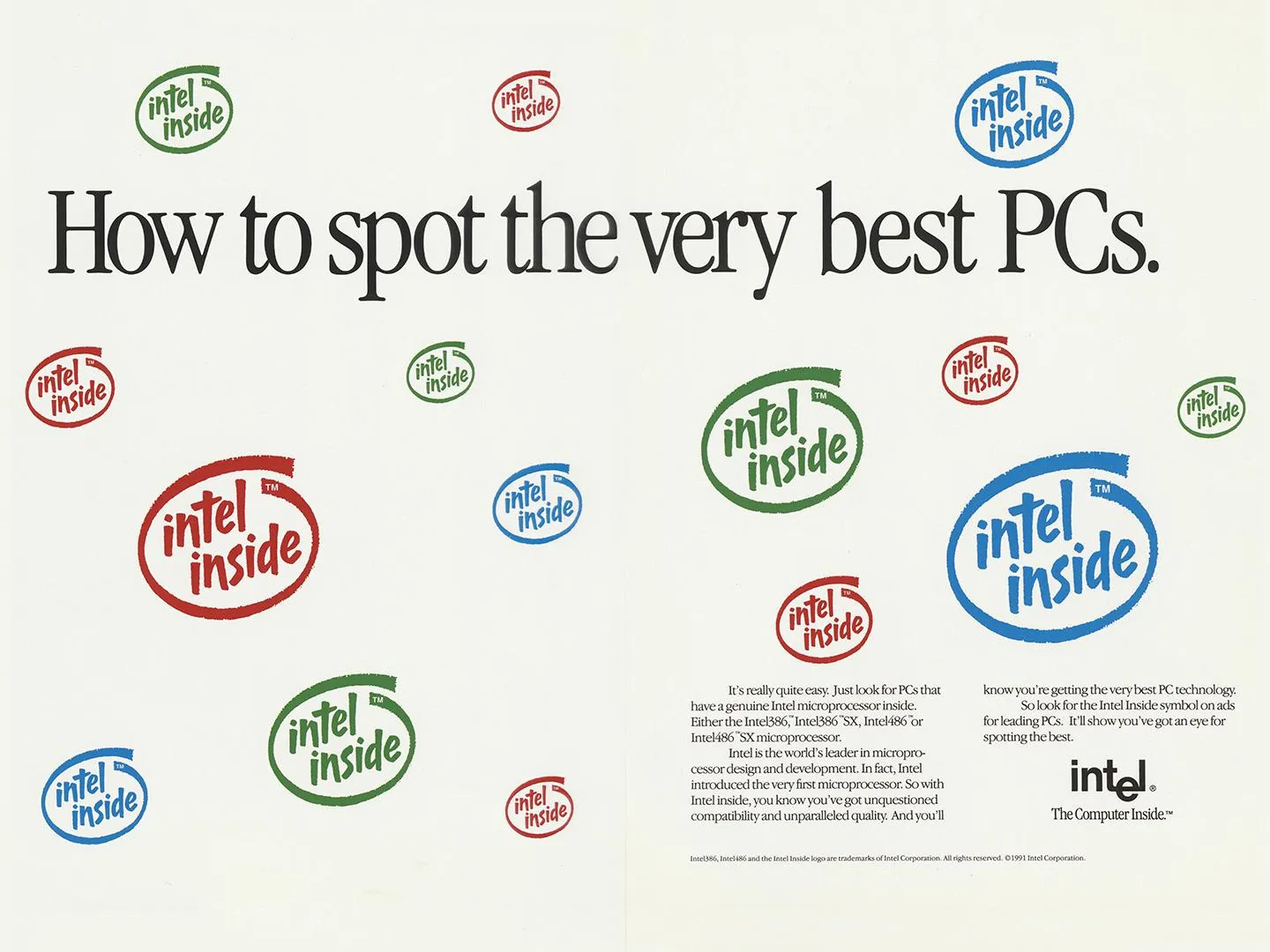

When you think of CPUs, Intel is probably the first name that pops into your head. It's pretty amazing how a company that makes something most people never lay eyes or hands on has become so widely known. In the early 2000s, Intel ranked as the sixth most valuable brand in the world, right up there with big shots like Coke and Disney!

As a CPU manufacturer, Intel's immediate customers were design engineers at companies that made personal computers. But Intel didn't want to rest their fate on the design engineer's choice.

So, in 1991, Andy Grove challenged his marketing chief, Dennis Carter, to come up with a way to promote Intel directly. Carter borrowed the idea of cooperative marketing from the consumer goods industry and created the iconic Intel Inside campaign.

A 2002 Harvard Business Review case study noted that in 2001 alone, approximately 150 million Intel Inside stickers were printed, and more than $1.5 billion in Intel Inside advertising was generated.

We're in the midst of a rebundling

AI's ability to chat with users using natural language opens up an exciting opportunity to revamp how we interact with technology. We've seen a similar showdown happen before during the browser and mobile OS wars.

In this game-changing situation, the winners get to be the new gatekeepers. Just like how Apple dominates the app ecosystem, this shift promises massive riches for the gatekeeper, making it a prize worth fighting for.

Of course, that doesn't mean the API business isn't important or interesting. It's just not the biggest prize up for grabs.
